The truth about the cloud

Welcome to Cloud 101!

In this 5-part series, I’ll give you some deep insight into what the Cloud really is, what it’s not, its true intent, and how it can provide value to your organization, and along the way I’ll help dispel the most common misconceptions. By the time this series is over, my hope is that you’ll understand enough about the Cloud to see the true value where it exists, and where it doesn’t. For your organization to succeed with Cloud initiatives, you need more than just a basic understanding, but the basics are always the best place to start. So, we’ll start there, and as each new article is posted, we’ll progress together from Cloud newbie to Cloud expert.

How Did We Get Here?

Depending on who you ask, the time of day, and which Cloud provider’s service happens to be unavailable at the time, the definition of the Cloud changes dramatically. For some, the Cloud is a place, meaning it’s a physical datacenter out there somewhere. Somewhere across the Internet, in an undisclosed location, the Cloud sits on physical servers complete with fluttering LEDs and noisy power supply fans. For those with better imaginations, the Cloud takes on more mythical proportions, idealized in The Empire Strikes Back as the cloud city of Bespin, where Han Solo sought refuge with his old friend, Lando Calrissian, and was later seemingly double-crossed and frozen in carbonite.

But, in truth, the Cloud is neither. Yes, there is a physical attribute to the Cloud. The software that powers the Cloud must be run on physical hardware, but it’s more than that.

Let’s jump into a history lesson.

The Cloud actually got its start due to Virtualization. Virtualization means creating a software-based (virtual) version of something that would otherwise be physical. That something in the technology world has evolved over time and can mean virtualized hardware, software, operating systems, storage devices, networks, memory, data, or even entire computer desktops.

EXTRA: Did you know? Virtualization is not new. The term was actually first coined in the 1960s during the mainframe era. The idea was very similar to what it is today and the intent then was to logically divide mainframe resources to better serve the different applications in a more efficient manner.

Virtualization is now one of the most popular aspects of datacenter computing. It allows organizations to run many different applications and services on a single piece of hardware. In the past, when the organization needed to run a new application or service, IT had to source a single server. Each new application required its own server. Now, with Virtualization, IT can source one single, beefy server with adequate resources (RAM, disk, and processor) to run many applications at once. Through Virtualization, each application is given its own virtual server instance to run inside, separating it from the other applications also running on the same hardware.

Obviously, this has many benefits. First off, the datacenter can take up less space because of server consolidation. Using less space and fewer servers translates into savings in power consumption and electricity costs. But, more than anything, it means a true cost savings in hardware. Where each application once required thousands of dollars in dedicated server hardware, the datacenter can now run efficiently on a far smaller hardware budget.

The Virtualization Problem

As I stated, the Cloud got its start from Virtualization. At its core, the Cloud is a highly virtualized environment. But, it seems, with every great innovation there’s always some drawback. As Virtualization became more popular, the technologies improved, and vendors innovated, Virtualization was found to have its own unique problems. Because Virtual Machines (VMs) were so easy to spin up, IT started handing them out like candy. If someone needed access to a specific application or service, IT would click a button and give almost immediate access.

As time progressed, these VMs became as common as sticky fingers on donut day. And, because they were so easy to spin up, and the server hardware was sourced to handle it, the VMs sat running forever. VMs left running continue to consume server resources and electricity, coming close to eliminating any savings achieved through Virtualization in the first place.

Because IT had no idea these VMs were still running and continually consuming server resources, it simply assumed that the new, beefy servers had run out of capacity. All IT needed to do was spin down the VMs that were no longer in use to reclaim the capacity needed to run a new VM. But without a proper inventory, IT did not know which VMs were still actually in use and was left to source new servers to accommodate requests. So, the core benefits of Virtualization, less hardware and lower cost, were erased and the datacenter began to grow again.
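To make the inventory idea concrete, here is a minimal sketch in Python of how unused VMs might be flagged. Everything in it is an assumption made for illustration: the `VM` record, the `find_zombies` helper, the 30-day idle limit, and the sample VM names and dates are all hypothetical and do not come from any particular Virtualization product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class VM:
    """Hypothetical inventory record for one virtual machine."""
    name: str
    owner: str
    last_used: datetime  # last time anyone actually used the VM


def find_zombies(inventory, now, idle_limit=timedelta(days=30)):
    """Return the VMs that have sat unused for longer than idle_limit."""
    return [vm for vm in inventory if now - vm.last_used > idle_limit]


# Made-up sample data, purely for illustration.
now = datetime(2024, 6, 1)
inventory = [
    VM("payroll-test", "finance", datetime(2024, 5, 28)),
    VM("gis-pilot", "planning", datetime(2023, 11, 2)),
    VM("permit-demo", "clerk", datetime(2024, 1, 15)),
]

zombies = find_zombies(inventory, now)
print([vm.name for vm in zombies])  # ['gis-pilot', 'permit-demo']
```

With even this much inventory in place, IT could spin down the two idle VMs and reclaim their resources instead of buying another server.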

These “Zombie” VMs also introduced another problem: security. When a new VM was requested, it was for a specific purpose, giving access to special applications and data. When VMs are left running long past the date they are needed, they remain available for access, potentially by those without proper authorization. Anyone in the organization would have the opportunity to access that restricted data, as would any employee who ever worked at the organization, as long as they remembered the access credentials. Imagine an employee who left the organization under adverse circumstances still being able to access data covertly.

What the Cloud Really Is

So, we know that Virtualization is hugely valuable, but we can now also see that it has its drawbacks if not managed correctly. For a single person, or a single team, to manage hundreds, maybe thousands, of Virtual environments is nearly impossible. Fortunately, the birth of today’s Cloud helps solve that.

So far, we know that the Cloud is a highly virtualized environment running on hardware in a datacenter. So, let’s put the final pieces together. Because Virtualization still offers enormous value, technology had to evolve to fix the problems, and it has.

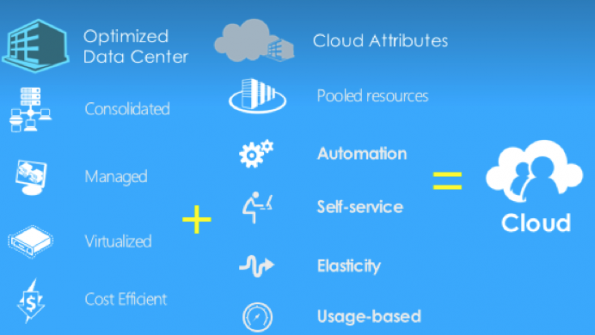

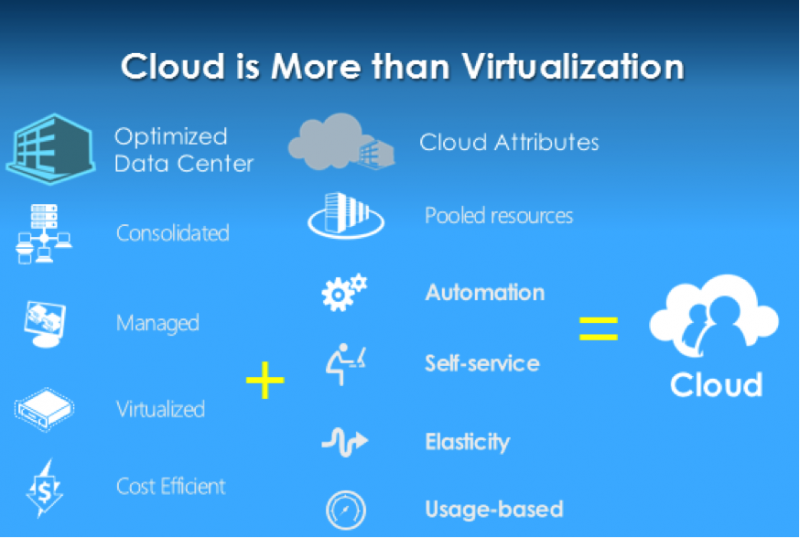

To fix the woes of Virtualization, technology has improved in the areas of optimization, orchestration (automation), elasticity (expanding and contracting automatically to the needs of the moment), inventory, management, and self-service. The Cloud is a fully automated Virtualization system. I’ll go deeper into each of these Cloud attributes in an upcoming part of this series.

This may be a lot to take in, but you can see that mere Virtualization has evolved into something more. I liken it to what I call the “Grandparent Button.” Whenever my wife and I take a trip together, our parents stay at our house to take care of our kids. Both my wife and I are comfortable with technology, so we have all the latest and greatest gadgets in the house. Unfortunately, those same gadgets tend to confound our parents. So, instead of using our appliances to do something as simple as preparing dinner, they’ll take the kids out to a restaurant after an hour of frustration trying to get our gadgets to work for them. Because of that, I’ve always thought that every new appliance or gadget should have a button made just for these types of situations. The “Grandparent Button” would understand the user’s intent and just automate the process.

The Cloud is the Grandparent Button for Virtualization. Using the correct technologies, the Cloud can be configured to work efficiently, in an automated manner, to carry out the intent of the organization. The new technologies that supply this function are built around specific processes invented to automate the Virtualized datacenter through organized workflows.

Imagine if your datacenter were configured so that the actual end-user could go to a web page and request access to an application or service, and in the background, a VM would spin up and give the end-user access almost immediately. When the project was completed, the VM would spin back down, release resources back to the server, and wait for another request. If more than one person needed access to that application or service, the VM would automatically draw the resources it needed, expanding to accommodate the volume, and then contract when those resources were no longer needed. It’s not hard to see how valuable this really is. The IT group merely needs to set everything up in the background and make the resources available, and then the end-users can serve themselves.
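The self-service scenario described above can be sketched as a toy model in Python. This is only an illustration under stated assumptions: the `SelfServicePool` class, its method names, the fixed 2 GB of RAM per user, and the "permits" application are all hypothetical, and a real Cloud platform would handle far more than memory.

```python
class SelfServicePool:
    """Toy model of one server that starts, grows, shrinks, and stops VMs on request."""

    def __init__(self, total_ram_gb, ram_per_user_gb=2):
        self.total_ram_gb = total_ram_gb
        self.ram_per_user_gb = ram_per_user_gb  # assumed fixed cost per user
        self.running = {}  # application name -> number of active users

    def free_ram_gb(self):
        """RAM still available on the server."""
        return self.total_ram_gb - self.ram_per_user_gb * sum(self.running.values())

    def request_access(self, app):
        """Spin up a VM for the app if needed, or expand the existing one."""
        if self.free_ram_gb() < self.ram_per_user_gb:
            raise RuntimeError("server is out of capacity")
        self.running[app] = self.running.get(app, 0) + 1

    def release_access(self, app):
        """Contract the app's VM, and spin it down when the last user leaves."""
        self.running[app] -= 1
        if self.running[app] == 0:
            del self.running[app]  # resources return to the server


pool = SelfServicePool(total_ram_gb=16)

pool.request_access("permits")  # first user: the VM spins up
pool.request_access("permits")  # second user: the VM expands
print(pool.running, pool.free_ram_gb())  # {'permits': 2} 12

pool.release_access("permits")  # the VM contracts
pool.release_access("permits")  # last user leaves: the VM spins down
print(pool.running, pool.free_ram_gb())  # {} 16
```

Notice that nothing here requires IT to click a button for each request. IT sets the capacity once, and the requests themselves drive the VM up and back down.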

One of my favorite representations of how this all fits together is shown in the following image. I use this image regularly in presentations and webinars to help audiences effectively visualize what the Cloud really is.

Summary

So, the ultimate definition of the Cloud is that it’s a highly virtualized, hardware-consolidated, optimized, cost-efficient, automated, and fully managed datacenter. The true beauty of this is that these same technologies and processes can run in your datacenter. Your organization can take full advantage of these principles to build a cost-effective environment that meets and exceeds requirements.

In part two of this series, we’ll delve into the types of Clouds (Public, Private, and Hybrid), allowing you to understand each model so you can weigh them against your Cloud initiatives to determine which one, or combination, makes the most sense for your organization. There’s value in each type, but each also comes with its own set of disadvantages. Clearly, the Cloud is important and it’s ever evolving. Getting a good handle on it now will set you up for success in the future.
